Research

Our ability to make sense of our environment is challenged by at least two fundamental and interacting hurdles. On one hand, stimuli appear in overabundance: looking at an object entails also perceiving the background and everything else in the room, and we seldom listen to speech without some level of background noise. On the other hand, stimuli are impoverished: very different objects can look identical from a given angle, and two talkers who differ in accent, age, or gender can produce very different acoustics while intending the same meaning. That we are nevertheless able to understand the world and one another is owed to powerful cognitive mechanisms. Elucidating these mechanisms is the primary aim of my work.

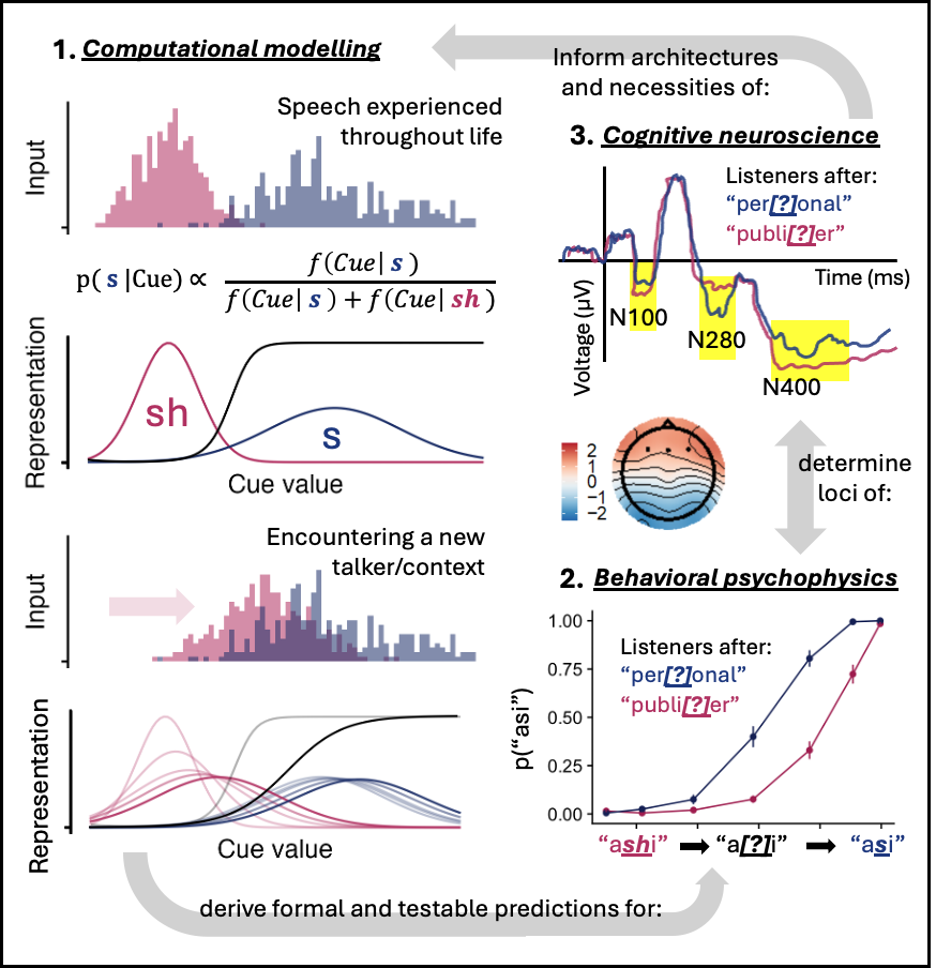

To address these general questions (how we represent information in our environment, how those representations change based on input, and how we use that information to facilitate understanding), my research program focuses on one specific use case: human speech. Speech is an ideal testbed because it is ubiquitous and meaningful, yet highly variable across situations. Through a combination of behavioral, computational, and cognitive neuroscience methods, my work investigates how we overcome this variability.

A full list of my publications is available on my Google Scholar profile. Conference proceedings, talks, and posters are listed on my CV.